I'm a Creativity Researcher. I'm Not Impressed With GenAI

People create in a completely different way.

My job is to study how people and groups create. I use that research to make recommendations about how we can create more consistently and more effectively. GenAI doesn’t help me. That’s because the way GenAI creates is nothing like the way people create. GenAI can make things that look as good as what people make (and yes, I’m impressed with that) but those programs make those things using different processes and algorithms. And there are important aspects of human creativity that GenAI doesn’t even attempt to replicate.

Generative AI isn’t new—it’s been around for more than fifty years. This column is a new version of an essay that I first published in 2006, and then revised in 2012, as a chapter in my book Explaining Creativity: The Science of Human Innovation. I called it “artificial creativity,” modeled on “artificial intelligence.” The term “generative AI” hadn’t been invented yet! Almost everything in this essay from 2006 is still true of today’s GenAI systems. That’s why I still call them “artificial creators.”

I’ll tell you about computer programs from the 1970s through the 1990s that paint, compose music, and write poetry. I’ll describe their dramatic limitations and I’ll explain why they don’t help us understand human creativity. Those limitations are still true of GenAI today. Read to the end to learn why GenAI systems—artificial creators—aren’t much help if you want to understand human creativity.

Artificial Creators

In October 1997, an audience filed into the theater at the University of Oregon and sat down to hear a very unusual concert. A professional concert pianist, Winifred Kerner, was performing several pieces composed by Bach. Well, actually, not all of the pieces had been composed by Bach, although they all sounded like they were. This was a competition to determine who could best compose pieces in Bach’s style. The audience would hear three compositions, one by Bach and the others by two different composers, and then vote on which was the real thing. This information-age competition had a novel twist: the audience had been told that one of the composers was a computer program known as EMI (Experiments in Musical Intelligence), developed by David Cope, a composer and a professor of music at the University of California, Santa Cruz (Cope, 2001). The audience knew that of the three compositions, one would be an original Bach, one would be a composition by Steve Larson, a professor of music theory at the University of Oregon, and one would be by EMI. For each performance, the audience had to decide which of the three composers had written it.

The audience first voted on Professor Larson’s composition. He was a little upset when the audience vote was announced: they thought that the computer had composed his piece. But Professor Larson was shocked when the audience’s vote on EMI’s piece was announced: they thought it was an original Bach! After the concert, Professor Larson said “My admiration for [Bach’s] music is deep and cosmic. That people could be duped by a computer program was very disconcerting” (quoted in Johnson, 1997, p. B9).

Back in 1962, the famous cognitive scientists Allen Newell, J. C. Shaw, and Herbert Simon predicted that “within ten years a computer will…compose music that is regarded as aesthetically significant” (1962, p. 116). They weren’t just bluffing; by 1958, a program written for the ILLIAC computer had already composed music using Palestrina’s rules of counterpoint, and the composition had been performed and recorded by a string quartet (p. 67). Thirty-five years later, at the University of Oregon, their prediction finally seemed to come to pass.

The first computer was conceived by Charles Babbage in the 19th century; he called it the Analytical Engine. His complicated contraption of gears, pulleys, and levers was never built, but it caused a lot of Victorian-age speculation. Babbage’s friend Ada Augusta, the Countess of Lovelace, famously wrote that “the engine might compose elaborate and scientific pieces of music of any degree of complexity or extent . . . [but] the Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform” (Augusta, 1842, notes A and G).

Until ChatGPT was released in 2022, most of us didn’t believe that computers could be creative. Now, when we read brilliant essays written by ChatGPT, or see the images it generates at our command, we can’t deny that it’s creative. Most of us know by now that ChatGPT is “trained” on an enormous amount of text from the Internet. No single person created ChatGPT. But what about a program like EMI, which was written by a single programmer, David Cope? If EMI seems creative, many of us would attribute that creativity to Cope. After all, the computer is only following the instructions that Cope provided in his line-by-line program. Computer creativity is incompatible with the Romantic and New Age idea that creativity is the purest expression of a uniquely human spirit, an almost spiritual and mystical force that connects us to the universe. When this essay was first published in 2006, it was the first to analyze artificial creators from the perspective of a psychologist studying creativity. Even in 2012, when I published the second version, very few books about creativity research said anything about these programs.

In the 1990s, artificial intelligence programs were very different from ChatGPT and Perplexity. Today, those 1990s approaches are called cognitive AI, in contrast with today’s machine learning. Although machine learning gets all the attention, some computer scientists are still working with cognitive AI. Those researchers generally conceive of creativity as a set of mental operations on a store of mental structures. For example, Dasgupta’s (1994) computational theory of scientific creativity describes creativity solely in terms of “(1) symbolic structures that represent goals, solutions, and knowledge and (2) actions or operations that transform one symbolic structure to another such that (3) each transformation that occurs is solely a function of facts, rules, and laws contained in the agent’s knowledge body and the goal(s) to be achieved at that particular time” (Dasgupta, 1994, p. 39).
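A system of the kind Dasgupta describes can be sketched as a search over symbolic states. In this minimal Python sketch (an illustration, not Dasgupta’s own formalism), a state is a set of symbolic facts, the “knowledge body” is a hypothetical dictionary of rules, and each transformation depends only on those rules and the goal. Breadth-first search finds the shortest chain of transformations that reaches the goal. The rule names and facts are invented for the example.

```python
from collections import deque

# Hypothetical knowledge body: rule name -> (facts required, facts added).
RULES = {
    "add_wheels": ({"frame"}, {"rolls"}),
    "add_motor": ({"frame", "rolls"}, {"self_propelled"}),
    "add_seat": ({"frame"}, {"carries_rider"}),
}

def plan(start, goal, rules=RULES):
    """Breadth-first search over symbolic states (sets of facts).

    Returns the shortest list of rule names that transforms `start` into a
    state containing every fact in `goal`, or None if the rules can't get there.
    """
    start = frozenset(start)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:  # every goal fact is present
            return steps
        for name, (needs, adds) in rules.items():
            if needs <= state:  # rule applies only if its preconditions hold
                nxt = state | adds
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None

print(plan({"frame"}, {"self_propelled", "carries_rider"}))
```

Everything this search can ever produce is already implicit in `RULES` and the goal, which is the sense in which cognitive AI treats creativity as algorithmic.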

Today’s generative AI systems use very different algorithms from cognitive AI. But they’re still algorithms. The claim that creativity is algorithmic flies in the face of our beliefs that creativity is mystical, insightful, and inspired.

The Artificial Painter: AARON

Harold Cohen was an English painter with an established reputation when he moved to the University of California, San Diego in 1968 for a one-year visiting professorship. He worked with his first computers there, and after the experience he chose to stay in the United States and explore the potential of the new technology. In 1973, while Cohen was a visiting scholar at Stanford University’s Artificial Intelligence Laboratory, he developed a program that could draw simple sketches. He called it AARON (see Figure 1). Cohen continued to revise and improve AARON for decades. AARON and Cohen have exhibited at London’s Tate Gallery, the Brooklyn Museum, the San Francisco Museum of Modern Art, and many other international galleries and museums, and also at science centers like the Computer Museum in Boston (McCorduck, 1991).

Figure 1. Harold Cohen, AARON, KCAT, 2001, Whitney Museum of American Art

Each night, Cohen had AARON generate 150 pieces; he selected the best 30 and then decided to print about five or six (Cohen, 2007). Cohen thought of AARON as “an apprentice, an assistant, rather than as a fully autonomous artist. It’s a remarkably able and talented assistant, to be sure, but if it can’t decide for itself what it wants to print…then, clearly, it hasn’t yet achieved anything like full autonomy” (Cohen, 2004).

In 2006, when I published the first edition of my book Explaining Creativity, Harold Cohen’s system was the best-known and most successful example of a computer program that draws by itself. In the 1990s, an ever-growing number of artists were making electronic art (see Candy & Edmonds, 2002). Cohen developed AARON through an iterative design process: at each stage, he evaluated the output of the program and then modified the program to reflect his own aesthetic judgment about the results. We can see the history of this process in his museum exhibits: AARON’s earlier drawings are primarily abstract, while its later drawings are more representational (like Figure 1).

Starting in 1986, Cohen worked at getting AARON to draw in color. He developed a “rule base” that let AARON build a complete internal model of which colors would go where. AARON’s design benefits from Cohen’s years of expertise: “nothing of what has happened could have happened unless I had drawn upon a lifetime of experience as a colorist. I’ve evidently managed to pack all that experience into a few lines of code, yet nothing in the code looks remotely like what I would have been doing as a painter” (Cohen, 2007).

Some would say that AARON isn’t creative, for the same reason that Lady Lovelace gave: because the programmer provides the rules. Cohen agreed that AARON doesn’t demonstrate human creativity because it’s not autonomous: an autonomous program could consider its own past and rewrite its own rules (Cohen, 1999). AARON doesn’t choose its own criteria for what counts as a good painting; Cohen decided which ones to print and display. In one night, AARON might generate over 50 images, but many of them are quite similar to one another; Cohen chose to print the ones that were the most different from one another (personal communication, January 14, 2004). To be considered truly creative, the program would have to develop its own selection criteria; Cohen (1999) was skeptical that this could ever happen. Cohen’s process of creating AARON fits in well with what psychologists have learned about the creative process: his creativity was a continuous, iterative process, rather than a sudden moment of insight or the creation of a single brilliant work.
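The selection step that Cohen performed by hand, keeping the images most different from one another, can be sketched as greedy farthest-point selection. This is a hypothetical illustration, not AARON’s code or Cohen’s actual criteria: each “drawing” is reduced to an invented three-number feature vector, and “different” is assumed to mean Euclidean distance between those vectors. The funnel of 150 generated, 30 kept, and about six printed follows the numbers Cohen gave in 2007.

```python
import math
import random

def generate_candidates(n, seed=0):
    """Stand-in for one night's run: n hypothetical 'drawings', each reduced
    to an invented 3-number feature vector (e.g., density, hue, curvature)."""
    rng = random.Random(seed)
    return [tuple(rng.random() for _ in range(3)) for _ in range(n)]

def most_different(items, k):
    """Greedy farthest-point selection: start from the first item, then keep
    adding the item whose nearest already-chosen neighbor is farthest away."""
    if k <= 0 or not items:
        return []
    chosen = [items[0]]
    remaining = list(items[1:])
    while remaining and len(chosen) < k:
        best = max(remaining, key=lambda c: min(math.dist(c, s) for s in chosen))
        chosen.append(best)
        remaining.remove(best)
    return chosen

night = generate_candidates(150)
shortlist = most_different(night, 30)
to_print = most_different(shortlist, 6)
print(len(shortlist), len(to_print))
```

The sketch only works because someone has already decided which features matter and how to measure distance between them. That decision is the aesthetic judgment AARON never made for itself.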

You might say that the drawings aren’t by AARON, that they’re really by Cohen, working with a very efficient assistant. Cohen (2007) agreed that the paintings are his: “I’ll be the first artist in history to have a posthumous exhibition of new work,” and he called AARON his “surrogate self” (2007). But attributing ownership and authorship to Cohen is a little too simplistic; it doesn’t help us explain the creative process that generated these drawings. As Cohen pointed out, “it makes images I couldn’t have made myself by any other means” (2004). To explain how these drawings and paintings were created, we have to know a lot about the program, and about how AARON and Cohen interacted over the years.

Artificial Writers

For over 50 years, computer scientists have attempted to write programs that can write literature or poetry, and the dream of a story-writing machine is centuries older (Sharples & Pérez y Pérez, 2022). They learned early on that programs that write poetry were more successful than programs that write prose. This isn’t because poetry is easier to write; it’s because human readers are used to reading meaning into ambiguous poems. When we read a poem, we expect to do a lot of interpretive work, providing much of the meaning ourselves. As a result, the program doesn’t have to be so good at writing meaning into the poem (Boden, 1999, p. 360).

The earliest story-writing programs focused on creating interesting plots, with characters that have motivations, and actions that make sense in the context of those motivations. That’s because the technology wasn’t good enough to write convincing sentences. These plot-writing programs—like the pioneering TALE-SPIN program (Meehan, 1981)—start with scripts that represent stereotypical behavior, and character motivations and their likely resulting actions, including help, friendship, competition, revenge, and betrayal. If you’re interested in learning more, listen to my podcast interview with Mike Sharples, an early pioneer in computer story writing.

TALE-SPIN represents character motivations, but it has no concept of an overall narrative structure, or of how those goals can best fit together to make an interesting story. It generates stories, but it has no ability to evaluate the resulting stories or to modify them to satisfy aesthetic criteria. A 1990s program known as MINSTREL (Turner, 1994) made a distinction between the overall goal of a good story and the goals of the characters; a character’s goals could be rejected if they didn’t fit into the overall structure of the narrative. MINSTREL relied on 25 transformative rules called TRAMs (Transform, Recall, Adapt Methods). Some of the most common TRAMs were “ignore motivations” and “generalize actor”; one of the less common was “thwart via death.” MINSTREL’s stories weren’t great, but they weren’t horrible, either; when people were asked to judge the quality of the stories, without knowing that they were computer generated, they usually guessed that the author was a junior-high-school student.

MINSTREL added a stage of evaluation that TALE-SPIN lacked. TALE-SPIN used the characters’ different motivations to construct plots, but those motivations often didn’t fit together to make a coherent narrative. MINSTREL addressed this problem: it created motivations for each character, but then evaluated them all to see which ones worked best to make a coherent narrative. Still, MINSTREL never had a final evaluation stage; it couldn’t examine the stories it generated to determine which ones were the best.
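To make the difference between the two programs concrete, here is a toy Python sketch. It is not TALE-SPIN’s or MINSTREL’s actual code or knowledge representation; the characters, goals, theme, and function names are all hypothetical. A TALE-SPIN-like step lets each character pick a goal independently, and a MINSTREL-like step then rejects the goals that don’t serve the story’s overall theme. As in the programs described above, nothing compares finished stories to pick the best one.

```python
import random

# Hypothetical characters and the goals each might pursue (invented for illustration).
CHARACTER_GOALS = {
    "knight": ["help", "competition", "revenge"],
    "princess": ["friendship", "betrayal", "help"],
    "dragon": ["revenge", "competition"],
}

def propose_goals(rng):
    """TALE-SPIN-like generation: each character picks a goal on its own,
    with no regard for the story as a whole."""
    return {name: rng.choice(options) for name, options in CHARACTER_GOALS.items()}

def author_filter(goals, theme):
    """MINSTREL-like story-level evaluation (greatly simplified): keep only
    the character goals that serve the overall theme and reject the rest."""
    return {name: goal for name, goal in goals.items() if goal in theme}

def tell_story(seed, theme):
    rng = random.Random(seed)
    kept = author_filter(propose_goals(rng), theme)
    # No final evaluation stage: this draft is returned as-is, never compared
    # with other generated stories or judged as a whole.
    return [f"The {name} acts out of {goal}." for name, goal in kept.items()]

tragedy = {"revenge", "betrayal"}
for sentence in tell_story(seed=1, theme=tragedy):
    print(sentence)
```

Some drafts keep no goals at all; a final evaluation stage, which neither program had, would be the place to notice and discard them.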

The Artificial Orchestra

Today’s GenAI systems seem like they might replace the individual human creator. But some AI programmers start with the observation that creativity isn’t always a property of solitary individuals; collaborating groups are sometimes more intelligent working together than individuals working alone. This is reflected in the field of distributed artificial intelligence, “DAI” for short, in which developers program many independent computational entities—called agents—and then let them loose in artificial societies to interact with one another. What emerges is a form of group intelligence called distributed cognition.

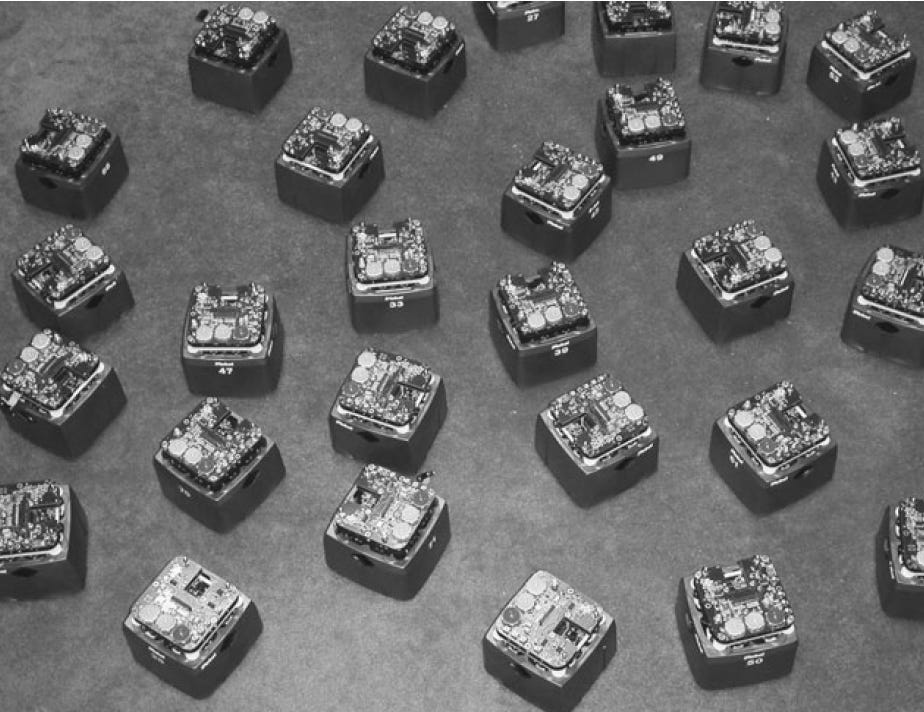

This field originated in the 1990s, and since then its techniques have been used to simulate the group dynamics of distributed creativity (Sawyer & DeZutter, 2009). In 2002, at MIT’s Artificial Intelligence Lab, James McLurkin developed a robotic orchestra with 40 robots, each with a sound synthesizer chip (see Figure 2). The robots worked together to make collective decisions about how to split a song into parts, so that each robot would know which part to play on its sound chip. This orchestra didn’t need a conductor or an arranger to play together; a conductor would have meant centralized cognition, and McLurkin’s orchestra was a classic example of distributed cognition.

Figure 2. James McLurkin’s Robotic Orchestra

In 2003, at Sony’s Computer Science Lab in Paris, a second virtual orchestra performed. Eduardo Miranda had developed a virtual orchestra with 10 computerized performers. But rather than perform an existing score, Miranda used the theories of distributed cognition to have them collectively create their own original score. Each player was programmed to generate simple sequences of musical notes. More important, each player was programmed to listen to the other players, to evaluate their novel sequences, and to imitate some of them with variations. Miranda then left his virtual orchestra to “rehearse” for a few days; when he came back, the orchestra had produced haunting melodic streams. This was distributed creativity: the melodies were created by a group of 10 virtual players, independent agents that worked together (Huang, 2003).
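The listen, evaluate, and imitate loop can be sketched as a toy multi-agent simulation. This is a hypothetical illustration, not Miranda’s model: phrases are four MIDI pitch numbers, every player shares an invented taste for small melodic leaps, and a listener imitates a heard phrase (with one note varied) only if it rates that phrase at least as highly as its own best. No player is in charge; whatever melodies survive emerge from the interactions.

```python
import random

NOTES = list(range(60, 72))  # assumed representation: MIDI pitches C4..B4

def random_phrase(rng, length=4):
    return [rng.choice(NOTES) for _ in range(length)]

def vary(phrase, rng):
    """Imitate with variation: copy the phrase and nudge one note, staying in range."""
    copy = list(phrase)
    i = rng.randrange(len(copy))
    copy[i] = min(max(copy[i] + rng.choice([-2, -1, 1, 2]), NOTES[0]), NOTES[-1])
    return copy

def smoothness(phrase):
    """Hypothetical shared taste: smaller melodic leaps score higher."""
    return -sum(abs(a - b) for a, b in zip(phrase, phrase[1:]))

def rehearse(n_players=10, rounds=2000, seed=0, max_repertoire=5):
    rng = random.Random(seed)
    repertoires = [[random_phrase(rng)] for _ in range(n_players)]
    for _ in range(rounds):
        player, listener = rng.sample(range(n_players), 2)
        heard = rng.choice(repertoires[player])
        # Evaluate: imitate only phrases at least as good as the listener's own best.
        own_best = max(repertoires[listener], key=smoothness)
        if smoothness(heard) >= smoothness(own_best):
            repertoires[listener].append(vary(heard, rng))
            # Forget the weakest phrases so each repertoire stays small.
            repertoires[listener].sort(key=smoothness, reverse=True)
            del repertoires[listener][max_repertoire:]
    return repertoires

band = rehearse()
best = max((p for rep in band for p in rep), key=smoothness)
print("smoothest phrase in the band:", best)
```

Because each listener keeps its best phrase, the group’s best phrase can only stay the same or improve over the rehearsal, even though no single player directs the result.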

Creativity researchers have discovered that creative work often occurs in collaborative social settings. These collaborative orchestras build on those findings: creative collaboration is distributed creativity.

The Lessons of Artificial Creativity

These cognitive AI systems seem to meet what’s called the standard definition of creativity: putting something into the world that’s both new and useful. Artificial creators generate new ideas, or new combinations of existing ideas, and then they express them in readable or visible form. But although these programs generate new combinations, they don’t do it like people do. None of these artificial creators simulate the cognitive processes and structures that psychologists have associated with creativity. But future efforts along these lines, if they were guided by interdisciplinary teams of psychologists and computer scientists, could help us explain creativity. First, programs could be developed to simulate and test different theories of the incubation stage—when your subconscious mind is bouncing around ideas and concepts in your brain. Does incubation occur by random combination, or in some sort of guided way? We could try simulating both in an artificial creator, and observe the differences in behavior. Second, programs could be developed to explore in detail how concepts are structured, stored, and combined—again, by simulating each theory in a computer program and then comparing the results.
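The first proposal, comparing random and guided incubation, could start as small as this Python sketch. It is a hypothetical illustration, not an established model of incubation: concepts are invented feature sets, combining two concepts simply pools their features, and “guided” means ranking pairs by an assumed heuristic that favors moderate feature overlap (Jaccard similarity above 0 and at most 0.5). Swapping in a different, psychologically grounded heuristic is exactly the kind of theory test the paragraph describes.

```python
import itertools
import random

# A toy store of concepts, each a set of features (invented for illustration).
CONCEPTS = {
    "bird": {"wings", "flies", "feathers", "alive"},
    "boat": {"floats", "carries", "hull", "travels"},
    "kite": {"flies", "string", "light", "toy"},
    "fish": {"swims", "fins", "alive", "scales"},
    "plane": {"wings", "flies", "carries", "travels"},
    "umbrella": {"canopy", "light", "folds", "shelter"},
}

def blend(a, b):
    """Combine two concepts by pooling their features."""
    return CONCEPTS[a] | CONCEPTS[b]

def novelty_score(a, b):
    """Assumed guidance heuristic: prefer pairs that share some features (so
    the blend hangs together) but not too many (so it isn't redundant)."""
    shared = CONCEPTS[a] & CONCEPTS[b]
    union = CONCEPTS[a] | CONCEPTS[b]
    j = len(shared) / len(union)
    return j if 0 < j <= 0.5 else 0.0

def random_incubation(rng, n):
    """Random combination: draw n concept pairs uniformly, with replacement."""
    pairs = list(itertools.combinations(sorted(CONCEPTS), 2))
    return [rng.choice(pairs) for _ in range(n)]

def guided_incubation(n):
    """Guided combination: take the n highest-scoring distinct pairs."""
    pairs = itertools.combinations(sorted(CONCEPTS), 2)
    return sorted(pairs, key=lambda p: novelty_score(*p), reverse=True)[:n]

def mean_score(pairs):
    return sum(novelty_score(a, b) for a, b in pairs) / len(pairs)

rng = random.Random(42)
for label, pairs in [("random", random_incubation(rng, 3)), ("guided", guided_incubation(3))]:
    print(label, pairs, round(mean_score(pairs), 3))
```

Comparing the two strategies across many runs, and against human data, is the kind of experiment an interdisciplinary team could design; the scoring function here is only a placeholder for a real theory.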

Artificial creators also teach us by virtue of what they leave out. None of these programs models emotion, expression and communication, motivation, or the separate generation and evaluation stages distinctive of human creativity. None of them model the collaborative dynamics of creative groups.

Criticism 1. Cognition and Emotion

When AI first emerged, in the 1950s and 1960s, it led to the idea that the human mind might be a computational device. But artificial creators only simulate the cognitive functions of the mind: rational, analytic, linear, propositional thought. These programs are especially helpful in understanding the cognitive components of creativity: analogy, metaphor, concepts and conceptual spaces, sequential stages, and transformative rules. But artificial creators don’t model emotion, motivation, or irrationality. Artificial creators focus on those elements of human creativity that rely on cognition.

Criticism 2. Problem Finding

Computers are useless. They can only give you answers.

—Pablo Picasso

Creativity researchers have found that the key to surprising and exceptional creativity is asking a good question. Creativity researchers call this problem finding. But artificial creators never have to come up with their own questions; their users decide which problems are important and then ask the questions. Today, this has a clever name: “prompt engineering.” (I recently heard someone call it “prompt architecture.”) Artificial creators simulate problem solving rather than problem finding.

This doesn’t bother some AI researchers, because they believe that creativity isn’t much different from everyday problem solving. They argue that most creativity really is problem solving; after all, most of us don’t experience problem-finding insights on a regular basis, and even genius-level creators don’t discover new problems every day. But most creativity researchers believe that problem finding can’t be explained as a type of problem solving. Coming up with good questions requires its own explanation.

Criticism 3. Creativity Can’t Be Algorithmic

Dr. Teresa Amabile, a creativity researcher at Harvard, long ago argued that to be creative, a task can’t be algorithmic. As she described it, an algorithmic task is one where “the solution is clear and straightforward,” and in contrast, a creative task is one “not having a clear and readily identifiable path to solution.” To solve a creative challenge, a new algorithm has to be developed. As an example, Amabile said that if a chemist applied a series of well-known synthesis chains for producing a new hydrocarbon complex, the synthesis would not be creative even if it led to a product that was novel and appropriate; only if the chemist had to develop an entirely new algorithm for synthesis could the result be called creative. Amabile’s (1983) definition of creativity excludes the possibility of computer creativity, because computer programs are algorithmic by definition.

AI researchers would respond that this definition is unfairly limited; after all, it seems that many human creative products result from algorithmic processes. Why shouldn’t we agree that the chemist’s new hydrocarbon complex is creative? And it raises a critical definitional problem: How can we know which mental processes are algorithmic, and which ones are truly creative? After Picasso and Braque painted their first cubist paintings, were all of the cubist paintings they did afterward just algorithmic? After Bach composed his first minuet, were all of his later minuets just algorithmic? Most of us wouldn’t be satisfied with such a restrictive definition of creativity. Still, it seems that Amabile is on to something. If an algorithm tells you what to do—if you follow a set of existing rules to create—most of us would agree that’s less creative than if you come up with something without using existing rules, or if you invent a whole new algorithm.

Criticism 4. No Selection Ability

Although these programs generate many novel outputs, the evaluation and selection is usually done by a person. But creativity researchers have discovered that when people create, evaluation is just as critical as generation. For example, many creative people claim that they have a lot of ideas, and simply throw away the bad ones. And researchers have discovered that creators with high productivity are the ones who generate the most creative products.

Lenat’s AM (Automated Mathematician) program generated a lot of ideas that mathematicians thought were boring and worthless (Boden, 1999, p. 365). But AM didn’t have to select among its creations; Lenat himself painstakingly sifted through hundreds of program runs to identify the few ideas that turned out to be good ones.

David Cope, EMI’s developer, carefully listens to all of the compositions generated by EMI, and selects the ones that he thinks sound most like the composer being imitated. EMI itself has no ability to judge which of its compositions are the best. When EMI is trying to imitate Bach, Cope said that one in four of the program runs generates a pretty good composition. However, Bach is easier for EMI to imitate well than some other composers; the ratio for Beethoven is one good composition out of every 60 or 70 (Johnson, 1997).
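Cope’s hit rates give a sense of how much selection work stayed with the human. As a back-of-the-envelope sketch, assume (a simplification the text does not claim) that each run independently produces a usable piece at the stated rate; collecting k good pieces then takes k divided by the rate runs on average. Taking 65 as the midpoint of Cope’s “60 or 70” for Beethoven is also an assumption.

```python
from fractions import Fraction

def expected_runs(good_wanted, hit_rate):
    """Expected program runs needed to collect `good_wanted` usable pieces,
    assuming each run independently succeeds with probability `hit_rate`.
    Each success takes 1 / hit_rate runs on average (geometric distribution),
    so by linearity of expectation the total is good_wanted / hit_rate."""
    if not 0 < hit_rate <= 1:
        raise ValueError("hit_rate must be in (0, 1]")
    return good_wanted / hit_rate

bach = Fraction(1, 4)        # "one in four" runs (Johnson, 1997)
beethoven = Fraction(1, 65)  # assumed midpoint of "one out of every 60 or 70"

print(expected_runs(10, bach))       # 40
print(expected_runs(10, beethoven))  # 650
```

Under these assumptions, ten good Beethoven-style pieces mean roughly 650 compositions for Cope to listen to and judge, evaluation work that EMI could not do for itself.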

Many of us think that creativity is grounded in an unconscious incubation stage, leading to a new idea in a moment of insight. We tend to neglect the hard conscious work of evaluation and elaboration. Artificial creators show us how important evaluation and elaboration really are. Evaluation often goes hand-in-hand with the execution and elaboration of an idea. When people do this, they use the tools of their medium. Painters work with brushes and canvas; composers sit at the piano and experiment with different phrases and chords. Most creativity is embodied; it requires engagement with the physical world. But artificial creators never “execute” in the embodied way that a human creator does—hands-on work with paints and brushes, or trying out a melody on a piano to see how it works.

Because artificial creators don’t evaluate and execute their own creations, they implicitly assume that generating a product is what creativity is about. But creativity is an iterative process of action in the world. Evaluation, elaboration, and physically making things play central roles in human creativity. Artificial creators can simulate idea generation, but that is only half of human creativity; evaluation, selection, and execution are equally important.

Criticism 5. What About the Development Process?

The programmers who develop artificial creators rarely analyze their own debugging and development process. But typically, early versions of a program produce many unacceptable or uncreative outputs, and the programmer has to revise the program so that these don’t happen in later versions of the software. Only by becoming familiar with the program’s development cycle, with how the programmers sculpt and massage its behavior through successive revisions, could we really understand the role played by the human metacreator.

These early cognitive AI systems were developed by programmers who were also creatively talented in that particular domain. David Cope was a professional composer before he started EMI; Harold Cohen was a successful painter before beginning work on AARON. Only a talented musician would be able to “debug” the Bach-like compositions of EMI. A nonmusician wouldn’t have the ability to judge which versions of the program were better. Only a talented visual artist like Harold Cohen could tell which paintings by AARON were better paintings. A non-artist wouldn’t be able to select between different versions of the program and choose a promising future path for development.

When we don’t know about the development process, we don’t know how the programmer’s creative choices are reflected in the program. It’s easy to come to the incorrect conclusion that the programmer simply wrote a program one day, and out popped a novel and interesting result. Programming an artificial creator is just like any other creative process: mostly hard work, with mini-insights throughout, and with most of the creativity occurring during the evaluation and execution stages.

Elvis Is in the Window

In the 1990s and the 2000s, only a few people had access to computer art, but people already seemed to like it as much as human creations. Audiences at EMI concerts, and gallery viewers at shows of AARON’s paintings, reacted the same way that they did to human creations. When Dr. Cope sat at the piano and played computer-composed Chopin for people, audiences responded just as they would to a human composer; they acted as if a creative being was reaching out to them through the music. When people look at an AARON painting, they instinctively try to interpret what it means—what is the artist trying to say?

Computer art raises an interesting possibility: the viewer may contribute as much to a work as the artist does. After all, people also see images of Elvis in dirty windows and think their cars have quirky personality traits. Artistic meaning isn’t only put into a work by the artist; it is often a creative interpretation by the viewer. Many influential theories of art, such as the reader-response theories of Stanley Fish and Wolfgang Iser, emphasize audience reaction rather than the creator’s intention.

Artificial creators aren’t very useful to creativity researchers because they’re missing several important features of the human creative process.

Evaluation. Artificial creators are not responsible for the evaluation stage of the creative process. The programmers decide when the program has discovered something interesting; many times when such a program runs, nothing interesting happens. These computer runs are discarded and never reported. In contrast, human creators have lots of ideas, and an important part of the creative process is picking the most promising ones for further elaboration.

Execution. Artificial creators generate something, and that’s the end of their creative process. Their process is linear: it is complete once the work is generated. But scientific studies of creativity have revealed that’s not the way humans do it. People have most of their ideas during the execution and elaboration of the work, while working with the materials of their medium. They don’t start with the idea; the idea emerges from the creative process. To explain human creativity, we need to study human action in the world. Computer artists don’t need to act in the world to create; they don’t work the same way that human artists do.

Communication. Artificial creators don’t have to communicate and disseminate their novel work to a creative community. But this stage is complex and involves immense creativity. Your work won’t go anywhere if you can’t get it in front of people. And that’s not a linear process; sharing a creative work, and seeing the audience reaction, can fundamentally transform the creative process itself. Musicians get inspiration from their listeners. Authors get ideas from their readers.

Artificial creators are interesting both for their successes and also because they show us the limitations of a linear, idea-focused theory of creativity. Artificial creators are weakest when it comes to the social dimensions of creativity, and when it comes to the embodied nature of creative work embedded in the physical world. Because they don’t have to evaluate, execute, or communicate, they don’t contain the important conventions and languages that allow communication between a creator and an audience. What goes on inside the program is nothing like the way that people do it. GenAI imitates human creativity, but it’s not creative the way humans are. That’s why I call it artificial creativity.

This column is a revision of “Computational Creativity,” a chapter in the 2012 second edition of Explaining Creativity: The Science of Human Innovation by Keith Sawyer.

References

Amabile, T. (1983). The social psychology of creativity. New York: Springer-Verlag.

Augusta, A. (1842). Sketch of the Analytical Engine invented by Charles Babbage, by L. F. Menabrea, with notes by the translator, Ada Augusta. Retrieved September 1, 2011, from http://www.fourmilab.ch/babbage/sketch.html

Boden, M. A. (1999). Computer models of creativity. In R. J. Sternberg (Ed.), The handbook of creativity (pp. 351–372). New York: Cambridge University Press.

Candy, L., & Edmonds, E. (Eds.). (2002). Explorations in art and technology. Berlin: Springer.

Cohen, H. (1999, October 10–13). A self-defining game for one player. Paper presented at Creativity & Cognition, Loughborough, UK.

Cohen, H. (2004). Public lecture. Tate Gallery, London.

Cohen, H. (2007, August 4). Towards a diaper-free autonomy. Lecture presented at the Museum of Contemporary Art, San Diego.

Cope, D. (2001). Virtual music: Computer synthesis of musical style. Cambridge, MA: MIT Press.

Dasgupta, S. (1994). Creativity in invention and design: Computational and cognitive explorations of technological originality. Cambridge, UK: Cambridge University Press.

Diehl, T. (2024, February 16). A.I. art that’s more than a gimmick? Meet Aaron. The New York Times, p. C10.

Garcia, C. (2015). Algorithmic music: David Cope and EMI. Computer History Museum. Retrieved December 20, 2024.

Huang, G. T. (2003, May). Machining melodies. Technology Review, p. 26.

Johnson, G. (1997, November 11). Undiscovered Bach? No, a computer wrote it. The New York Times, pp. B9, B10.

McCorduck, P. (1991). Aaron’s code: Meta-art, artificial intelligence, and the work of Harold Cohen. New York: W. H. Freeman.

Meehan, J. (1981). Tale-Spin. In R. C. Schank & C. J. Riesbeck (Eds.), Inside computer understanding: Five programs plus miniatures (pp. 197–226). Mahwah, NJ: Erlbaum.

Newell, A., Shaw, J. C., & Simon, H. A. (1962). The processes of creative thinking. In H. E. Gruber, G. Terrell, & M. Wertheimer (Eds.), Contemporary approaches to creative thinking (pp. 63–119). New York: Atherton Press.

Sawyer, R. K. (2012). Explaining creativity: The science of human innovation (2nd ed.). New York: Oxford University Press.

Sawyer, R. K., & DeZutter, S. (2009). Distributed creativity: How collective creations emerge from collaboration. Psychology of Aesthetics, Creativity, and the Arts, 3(2), 81–92.

Sharples, M., & Pérez y Pérez, R. (2022). Story machines: How computers have become creative writers. London: Routledge.

Turner, S. R. (1994). The creative process: A computer model of storytelling and creativity. Mahwah, NJ: Erlbaum.